What is Robots.txt in SEO?

In search engine optimization (SEO), the robots.txt file is the main tool website owners use to tell search engine crawlers which parts of a site they may request. This article covers what robots.txt is, why it matters for SEO, and how to use it effectively.

Understanding Robots.txt

Definition and Purpose

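Robots.txt is a plain text file located in the root directory of a website (for example, https://www.example.com/robots.txt) that tells search engine crawlers which parts of the site they may crawl. It serves as a roadmap for search engine bots, informing them which areas of the site are open for crawling and which should be excluded. Note that it governs crawling rather than indexing: compliance is voluntary on the crawler’s side, and a blocked URL can still appear in search results if other pages link to it.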

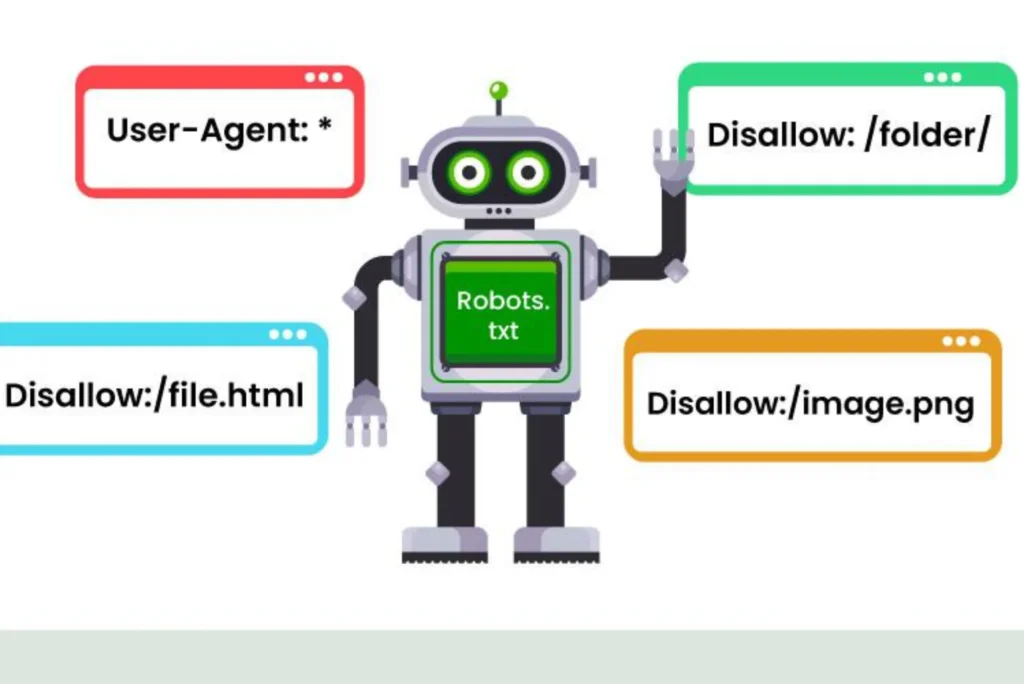
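Because the file always sits at the root of a host, its address can be derived from any page URL on that host. The following is a minimal sketch using Python’s standard library; the helper name `robots_url` and the example.com addresses are illustrative assumptions.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt address for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


blog_robots = robots_url("https://www.example.com/blog/post?id=1")
shop_robots = robots_url("https://shop.example.com/cart")
print(blog_robots)
print(shop_robots)
```

Each subdomain is a separate host, so shop.example.com and www.example.com would each need their own file.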

Syntax and Directives

The robots.txt file consists of groups of rules. Each group begins with one or more User-agent lines and applies to the crawlers named there. The most common directives include:

- User-agent: Specifies the crawler to which the following directives apply (e.g., Googlebot, Bingbot).

- Disallow: Indicates the URLs or directories that crawlers should not access.

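- Allow: Grants permission for crawlers to access specific URLs or directories, typically to carve an exception out of a broader Disallow rule.

- Sitemap: Points to the XML sitemap file containing a list of URLs on the site. It takes a full URL and applies to all crawlers, regardless of the User-agent group.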
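To see how these directives combine, here is a small sketch that parses a sample file with Python’s standard `urllib.robotparser` module. The rules, paths, and the example.com domain are made up for illustration, and `allowed` is a hypothetical helper. One caveat: Python’s parser applies the first matching rule in file order, whereas Google uses the most specific match, so the Allow line is placed before the broader Disallow to get the same answer under both.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration only.
ROBOTS_TXT = """\
User-agent: *
Allow: /admin/help/
Disallow: /admin/
Disallow: /cart/

User-agent: Googlebot
Disallow: /drafts/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent: str, path: str) -> bool:
    """Check whether the named crawler may fetch the given path."""
    return parser.can_fetch(agent, "https://www.example.com" + path)


for agent, path in [
    ("Bingbot", "/admin/"),           # blocked by the * group
    ("Bingbot", "/admin/help/faq"),   # exception carved out by Allow
    ("Googlebot", "/drafts/post-1"),  # blocked by the Googlebot group
    ("Googlebot", "/admin/"),         # Googlebot follows only its own group
]:
    print(agent, path, allowed(agent, path))

print(parser.site_maps())  # available in Python 3.8+
```

The last case is easy to overlook: a crawler that has its own User-agent group ignores the rules in the `*` group, so shared rules have to be repeated in each named group.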

Importance of Robots.txt in SEO

Controlling Crawl Budget

Robots.txt plays a crucial role in managing crawl budget, which refers to the number of pages search engines are willing to crawl on a website within a given timeframe. By excluding irrelevant or low-value pages from crawling, website owners can ensure that search engine bots spend their time on essential content, thereby optimizing crawl budget allocation. This matters most on large sites, where crawlers may not reach every URL.
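As a rough illustration, the sketch below checks a list of discovered URLs against a set of rules and reports how many remain crawlable. The rules, the URL list, and the choice of internal search, tag, and print pages as "low-value" are all assumptions made for the example. It uses plain path prefixes only, since Python’s `urllib.robotparser` does not interpret `*` wildcards inside paths.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules that exclude low-value sections of a site.
RULES = """\
User-agent: *
Disallow: /search
Disallow: /tag/
Disallow: /print/
"""

# Hypothetical URLs a crawler might discover on the site.
DISCOVERED_URLS = [
    "https://www.example.com/",
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/blog/robots-txt-basics",
    "https://www.example.com/search?q=widget",
    "https://www.example.com/tag/widgets/page/7",
    "https://www.example.com/print/blog/robots-txt-basics",
]

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# Split the URL list by whether a generic crawler may fetch each one.
crawlable = [u for u in DISCOVERED_URLS if parser.can_fetch("*", u)]
excluded = [u for u in DISCOVERED_URLS if u not in crawlable]

print(f"{len(crawlable)} of {len(DISCOVERED_URLS)} URLs remain crawlable")
for url in excluded:
    print("excluded:", url)
```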

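Keeping Crawlers Away from Sensitive or Duplicate Content

Certain pages or directories on a website may contain sensitive information or duplicate content that search engines should not crawl. Robots.txt lets website owners ask crawlers to stay out of such areas, which helps avoid duplicate content issues. It is not a security or de-indexing mechanism, however: the file is publicly readable, compliant crawlers follow it voluntarily, and a blocked URL can still be indexed without its content if other sites link to it. Pages that must stay out of search results need a noindex directive or authentication instead.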

Enhancing SEO Performance

Used well, robots.txt supports SEO performance by steering search engine crawlers toward high-quality, relevant content. It does not raise rankings directly. Instead, by directing bots away from non-essential pages or duplicate content, website owners make it more likely that their most valuable pages are crawled promptly and kept up to date in search results.

Best Practices for Robots.txt Usage

Use Disallow Sparingly

While disallowing certain pages or directories can be beneficial, excessive use of the Disallow directive may inadvertently block essential content, or the CSS and JavaScript files crawlers need to render pages, and so harm SEO performance. Exercise caution and only disallow content that is truly irrelevant or sensitive.

Regularly Monitor and Update

As websites evolve and content structures change, it’s essential to regularly review and update the robots.txt file to ensure it accurately reflects the site’s current architecture and content priorities.

Test Changes Carefully

Before implementing significant changes to the robots.txt file, such as disallowing or allowing access to new sections of the site, conduct thorough testing to assess the potential impact on crawlability and indexation.
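One way to test a change before deploying it is to compare the current and proposed rules against a list of URLs that must stay crawlable. The sketch below does this with Python’s standard `urllib.robotparser`; the rule sets, the URL list, and the helper names `load_rules` and `newly_blocked` are hypothetical. Python’s parser is simpler than the ones search engines run, so treat the result as a first check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt content held in a string."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def newly_blocked(current: str, proposed: str, urls, agent: str = "*"):
    """Return URLs that are crawlable now but blocked by the proposed rules."""
    old, new = load_rules(current), load_rules(proposed)
    return [
        url for url in urls
        if old.can_fetch(agent, url) and not new.can_fetch(agent, url)
    ]


# Hypothetical rule sets: the proposed version adds an overly broad prefix.
CURRENT_RULES = """\
User-agent: *
Disallow: /tmp/
"""

PROPOSED_RULES = """\
User-agent: *
Disallow: /tmp/
Disallow: /blog
"""

# Hypothetical pages that should always remain crawlable.
KEY_URLS = [
    "https://www.example.com/",
    "https://www.example.com/blog/robots-txt-guide",
    "https://www.example.com/products/widget",
]

regressions = newly_blocked(CURRENT_RULES, PROPOSED_RULES, KEY_URLS)
print(regressions)
```

Here the new `Disallow: /blog` line would cut off the blog article, which is the kind of side effect worth catching before the file goes live.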

Utilize Robots Meta Tags

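In addition to robots.txt directives, use robots meta tags within individual web pages to give search engine crawlers page-level instructions such as noindex (keep this page out of the index) or nofollow (do not follow its links). A crawler can only read a meta tag on a page it is allowed to fetch, so a page carrying noindex must not also be disallowed in robots.txt.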
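To make the mechanism concrete, the following sketch reads the robots meta tag from a page using Python’s standard `html.parser` module. The sample page, the `RobotsMetaParser` class, and the `robots_directives` helper are illustrative assumptions, and the check covers only the generic `robots` tag, not crawler-specific ones.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() != "robots":
            return
        # The content attribute is a comma-separated list of directives.
        for token in attr_map.get("content", "").split(","):
            token = token.strip().lower()
            if token:
                self.directives.add(token)


def robots_directives(html: str) -> set:
    """Return the set of robots meta directives found in the HTML."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page that should be crawled but kept out of the index.
PAGE = """<html><head>
<title>Order confirmation</title>
<meta name="robots" content="noindex, follow">
</head><body>Thanks for your order.</body></html>"""

directives = robots_directives(PAGE)
# "none" is shorthand for "noindex, nofollow".
is_indexable = "noindex" not in directives and "none" not in directives
print(sorted(directives), is_indexable)
```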

In the ever-evolving landscape of SEO, robots.txt remains a fundamental tool for website owners to influence search engine crawling and indexing behavior. By understanding its role, adhering to best practices, and leveraging it effectively, website owners can exert greater control over their site’s visibility and ultimately enhance their SEO performance.
